You can't really imagine a modern application without a data persistence layer. Whether it is a relational database, a document store, or a serverless key-value solution, you're reading and writing your data to a database. Let's explore how you can organize the data persistence layer, using the example of a Nest.js app writing data to DynamoDB.

First try

A simple read request looks like the following:

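    import { DynamoDBClient, GetItemCommand } from '@aws-sdk/client-dynamodb';
    import { unmarshall } from '@aws-sdk/util-dynamodb';

    const client = new DynamoDBClient({ region: 'us-east-1' });

    // The rest of this fragment is meant to run inside an async function.
    // Look up a single item by its partition key.
    const input = {
      TableName: 'my-table',
      Key: {
        Id: { S: '123' },
      },
    };
    const command = new GetItemCommand(input);
    const response = await client.send(command);

    // Convert the DynamoDB attribute map into a plain object.
    if (response.Item) {
      return unmarshall(response.Item);
    } else {
      return undefined;
    }

So whenever you need to read some data, you can just write this code and get a result. Soon you will discover that you need to read data by partition key in multiple places, so the natural next step is to create a dynamodb.ts file and put a slightly generalised version there: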

const region = process.env.AWS_REGION;

const client = new DynamoDBClient({ region: region });

const readAsync = async (tableName, key) => {

const input = {

TableName: tableName,

Key: { Id: { S: key } }

};

const command = new GetItemCommand(input);

const response = await client.send(command);

if (response.Item) {

return unmarshall(response.Item);

} else {

return undefined;

}

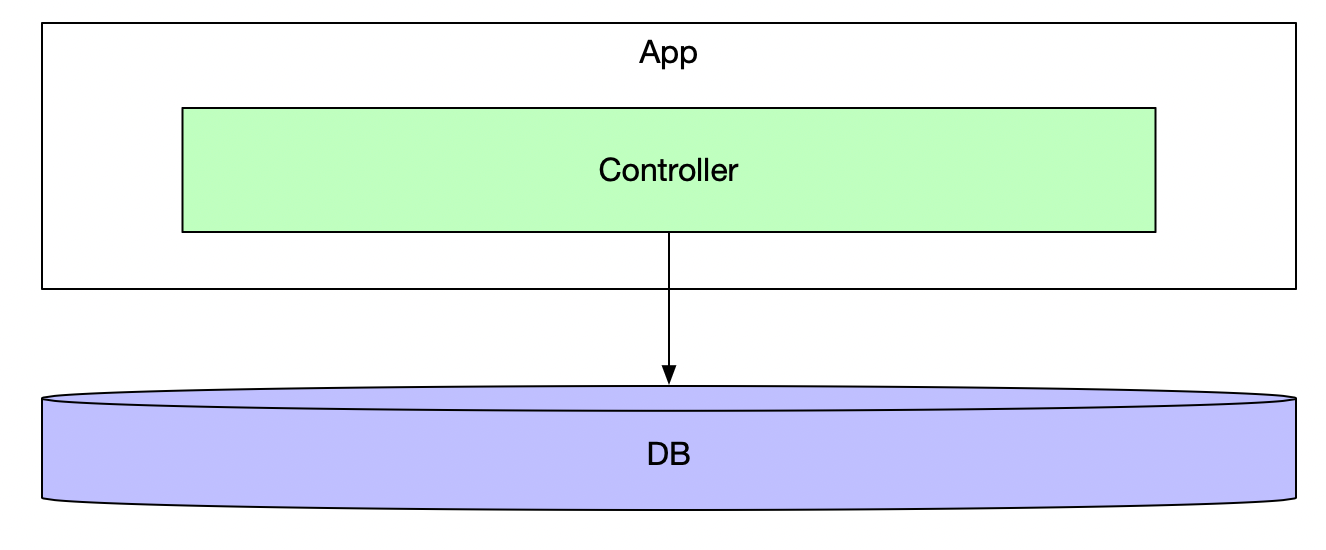

};After that you can import the function and call it at the controller level:

Despite this unification, the code still has a couple of maintainability problems:

- There is a single region definition, read from an environment variable

- You cannot easily replace the implementation in tests and have to rely on mock implementations instead

Class-based approach

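So, instead of a file with a few functions, we want to introduce a configurable class:

    import { DynamoDBClient } from '@aws-sdk/client-dynamodb';

    export class DynamoDbDriver {
      private client: DynamoDBClient;

      // The region is supplied by the caller instead of being read at module load.
      constructor(region: string) {
        this.client = new DynamoDBClient({ region });
      }

      ...
    }

Now you can pass the driver as a dependency.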

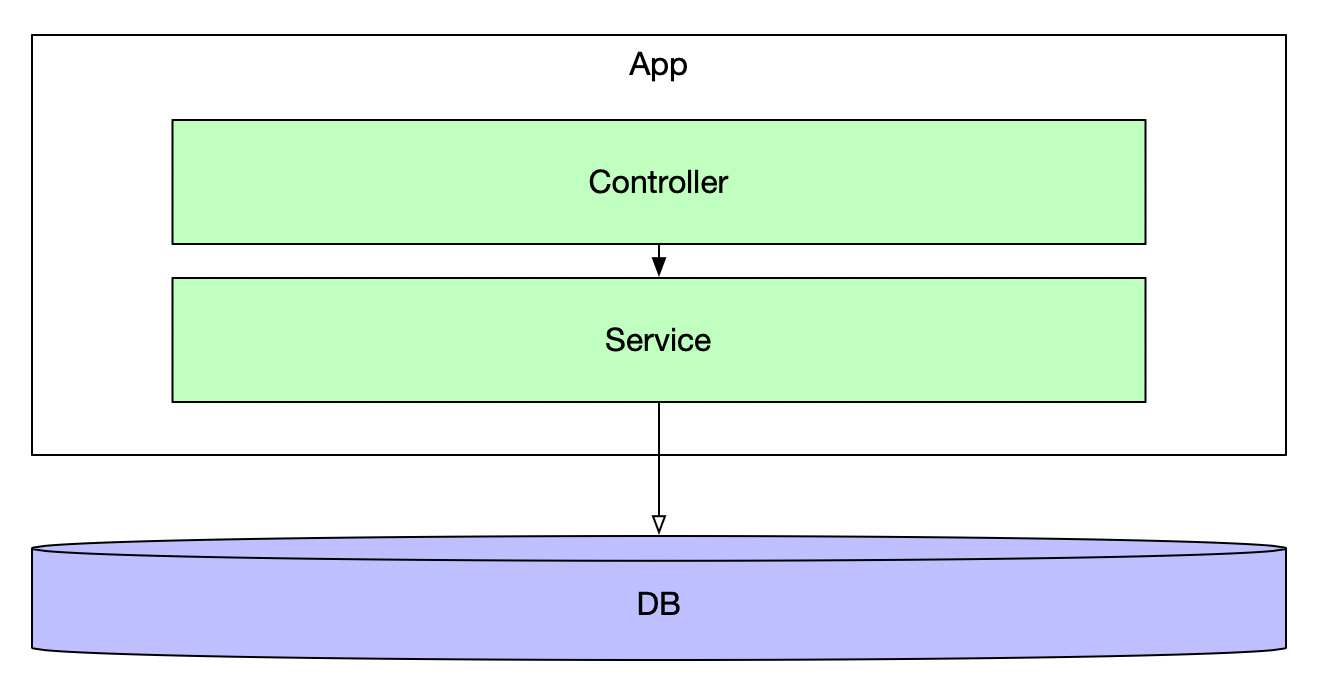

At the same time, calling the database directly from a controller, even through a database class, is a bad idea: the controller's responsibility is to receive a request and send a response, not to handle the data itself. So we should introduce a service layer where we can perform the business logic.
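To make the split concrete, here is a minimal sketch of a controller that only translates requests and responses, and a service that owns the logic. It deliberately avoids Nest.js and the AWS SDK so that it runs on its own. Every name in it (`ProfileStore`, `ProfileGreetingService`, `ProfileController`, the `profiles` table, the `name` attribute, and the greeting rule) is hypothetical and chosen only for illustration.

```typescript
// Hypothetical driver shape, reduced to the one method this sketch needs.
interface ProfileStore {
  readAsync(tableName: string, key: string): Promise<Record<string, unknown> | undefined>;
}

// Service layer: owns the business rule and knows nothing about HTTP.
class ProfileGreetingService {
  private readonly store: ProfileStore;

  constructor(store: ProfileStore) {
    this.store = store;
  }

  async getGreeting(id: string): Promise<string | undefined> {
    const profile = await this.store.readAsync('profiles', id);
    if (!profile) return undefined;
    const name = profile['name'];
    // Illustrative rule: fall back to a default when the name is missing.
    return `Hello, ${typeof name === 'string' ? name : 'stranger'}!`;
  }
}

// Controller layer: maps the service result onto an HTTP-like response.
class ProfileController {
  private readonly service: ProfileGreetingService;

  constructor(service: ProfileGreetingService) {
    this.service = service;
  }

  async getProfileGreeting(id: string): Promise<{ status: number; body: string }> {
    const greeting = await this.service.getGreeting(id);
    return greeting === undefined
      ? { status: 404, body: 'Profile not found' }
      : { status: 200, body: greeting };
  }
}

// Stand-in store so the sketch runs without AWS access.
const stubStore: ProfileStore = {
  async readAsync(_tableName: string, key: string) {
    return key === '123' ? { Id: '123', name: 'Ada' } : undefined;
  },
};

const controller = new ProfileController(new ProfileGreetingService(stubStore));
```

With this stub, `controller.getProfileGreeting('123')` resolves to a 200 response with the body `Hello, Ada!`, and any other id resolves to a 404. The controller never touches the store directly, which is the point of the extra layer.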

If you are considering switching databases later, you can also introduce an interface to make that migration easier:

    interface DbDriver {
      readAsync(tableName: string, key: string): Promise<Record<string, any> | undefined>;
      // The Promise<void> return type is an assumption; the method body is not shown here.
      writeAsync(tableName: string, key: string, params: any): Promise<void>;
      ...
    }

    export class DynamoDbDriver implements DbDriver {
      ...
    }

Dependency Injection

Finally, we want to leverage the DI capabilities of Nest.js and inject the DbDriver instance into our services:

    import { Injectable } from '@nestjs/common';

    @Injectable()
    export class DynamoDbDriver implements DbDriver {
      ...
    }

    @Injectable()
    export default class ProfileService {
      constructor(private readonly dbDriver: DynamoDbDriver) {}
    }

Now you have the flexibility to configure and inject DbDriver instances, and the ability to write unit tests for your services!
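The testing benefit can be sketched without Nest.js or AWS at all. The block below is an illustration under stated assumptions, not the implementation from this article: the return types on `DbDriver` are assumed, and `InMemoryDbDriver`, the `rename` method, and the `profiles` table are hypothetical names. The service here depends on the `DbDriver` interface rather than on the concrete `DynamoDbDriver`, which is what lets a fake take its place.

```typescript
// Shape of the DbDriver interface, with return types assumed for this sketch.
interface DbDriver {
  readAsync(tableName: string, key: string): Promise<Record<string, unknown> | undefined>;
  writeAsync(tableName: string, key: string, params: Record<string, unknown>): Promise<void>;
}

// Hypothetical in-memory fake: keeps items in nested Maps instead of calling DynamoDB.
class InMemoryDbDriver implements DbDriver {
  private readonly tables = new Map<string, Map<string, Record<string, unknown>>>();

  async readAsync(tableName: string, key: string): Promise<Record<string, unknown> | undefined> {
    return this.tables.get(tableName)?.get(key);
  }

  async writeAsync(tableName: string, key: string, params: Record<string, unknown>): Promise<void> {
    const table = this.tables.get(tableName) ?? new Map<string, Record<string, unknown>>();
    table.set(key, { ...params, Id: key });
    this.tables.set(tableName, table);
  }
}

// Service under test; it only knows about the interface.
class ProfileService {
  private readonly dbDriver: DbDriver;

  constructor(dbDriver: DbDriver) {
    this.dbDriver = dbDriver;
  }

  // Hypothetical business operation: rename an existing profile.
  // Resolves to false when the profile does not exist.
  async rename(id: string, name: string): Promise<boolean> {
    const existing = await this.dbDriver.readAsync('profiles', id);
    if (!existing) return false;
    await this.dbDriver.writeAsync('profiles', id, { ...existing, name });
    return true;
  }
}

// Example of a test body: seed the fake, call the service, inspect the stored item.
async function renameExample(): Promise<Record<string, unknown> | undefined> {
  const driver = new InMemoryDbDriver();
  await driver.writeAsync('profiles', '123', { name: 'Ada' });
  await new ProfileService(driver).rename('123', 'Grace');
  return driver.readAsync('profiles', '123');
}
```

In a real Nest.js app, TypeScript interfaces do not exist at runtime, so injecting by interface would need a provider token rather than the interface itself. The sketch sidesteps that by constructing the service by hand, which is usually enough for a unit test.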